Ensuring AI and Large Language Models Tell the Truth

October 2023


A Technological Problem…

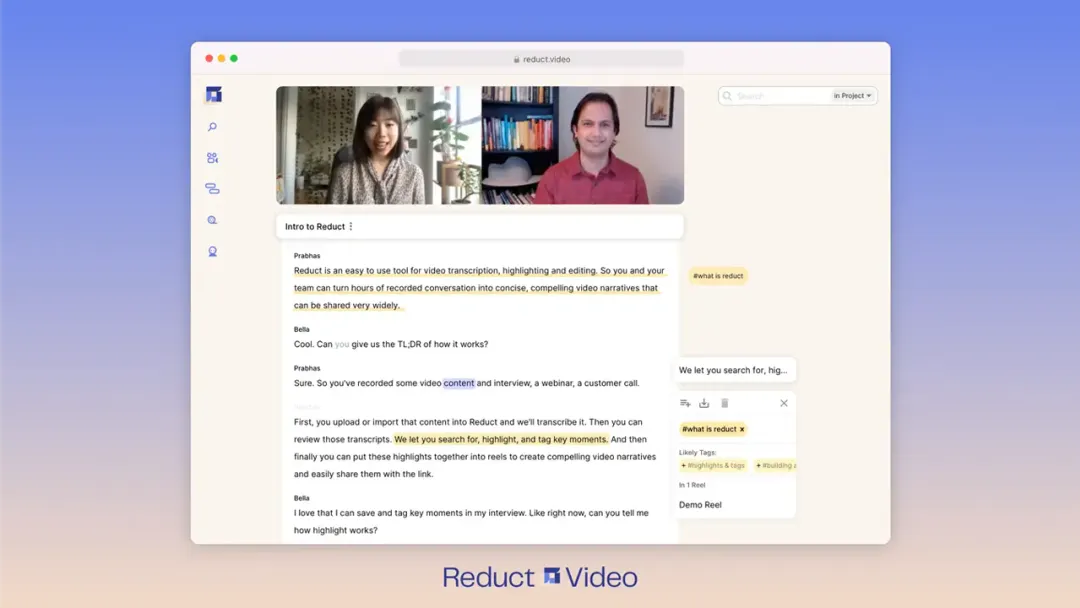

Managing many hours of video is difficult, and we’re always extending Reduct’s functionality to make it easier. Recently our customers have been asking: how are we integrating modern AI tools to help?

Generative large language models like GPT are a powerful new option for dealing with any type of language data. The possibilities they open up are tremendously exciting.

But they have a well-known weakness: they can lie.

If you ask a generative LLM a question, it doesn’t have innate knowledge of the answer. Instead, it builds up an answer based on how countless humans have used words in response to similar prompts. Often these answers are very good, even impressive. However, the LLM has no true understanding of the content, at least not in the sense that humans understand it. Its answer comes from an enormous number of dice rolls. They are very sophisticated dice rolls, but it’s still not uncommon for models like GPT to offer nonsense with complete confidence or, often worse, something that looks reasonable but is incorrect.

State-of-the-art AI already underlies Reduct in its transcription and alignment technologies, but it’s also important to us to bring you the cutting-edge assistive power and convenience that generative LLMs can provide. It’s against everything we believe at Reduct, however, to build a tool that might cause people to understand their data less well.

This led us to the question: How can we leverage the power of LLMs to answer user needs, without allowing them to distance our users from context, provenance, and truth?

The Reduct Ethos

We spend a lot of time at Reduct thinking through our decisions on how to build technology. The tools we choose to develop will affect our users’ abilities to properly work with and contextualize their data, and we take that responsibility seriously.

To deal with the unreliability of LLM results, we’ve worked from the following philosophical cornerstones:

- Real outputs are always real. In other words, any time we can return actual pieces of the transcript or clips of video as useful results, we aim for that, rather than returning raw LLM-generated content. This helps guard against showing LLM "hallucinations."

- Context and transparency are key. We don’t want an LLM to act as a "wall" between the user and their data; we want the user to be able to clearly see the provenance of whatever results they’re receiving.

- Keep the user in control. The user needs to be the ultimate judge and to have full control over correcting any computer-generated output, which they can only do if they can always see the surrounding context.

- Product development is a series of active choices. The choices we make on what features to build, and how to build them, are not neutral ones, and there isn’t a single default path any given product takes. Of the countless features we might develop for our customers, we want to build the ones that will be powerful and useful, and that help you be more connected with your data.

Our Development Approach

In this post we won’t yet delve into the technical detail about how we’ve approached our AI processes given the above philosophies. But here’s a bird’s eye view of some of the ways our philosophies have guided our work integrating modern AI into Reduct.

- LLMs as intermediate steps or in combination. Under the hood, we’ve discovered we can leverage the power of LLMs with far more reliability if we make them one layer among other techniques, or constrain them with other checks to make sure they give results that make sense.

- Privileging Reduct literals. We strongly lean toward answering user needs via "literals": tools that provide answers through highlights, tags, clips, reels, or other means of rendering actual pieces of transcript or video. This guards against some of the biggest pitfalls of generative LLMs: when the LLM produces a result, we validate it against the source material, preventing any hallucinated results.

- One click to context. Sometimes our users want analytical assistance that isn’t easily provided by sorting, searching, or classifying Reduct literals, as powerful as that can be. For more open-ended analysis, we always try to keep the results no more than a click away from their citations and context within the transcript.

- Users should know what they’re getting. We’re not the sort of company that will give you a lot of flashy AI and try to fool you into thinking it’s more dependable than it is. It’s important to us to make clear in the interface what style of results you’re getting and, if we’re not confident enough in a feature, to place it clearly in an experimental playground until we’ve convinced ourselves we have it right.

- Opening the black box into explainability. It’s a truism that the neural networks beneath modern LLMs are "black boxes", i.e., that we can’t know why the computer is making a decision, which exacerbates the unreliability problem. But we’re questioning that truism. We’ve had success at pulling information directly from an LLM’s structure, and we’re eager to keep using similar techniques to give us stronger knowledge about an LLM’s results.

- User choice, feedback, and editing. We’ve long prioritized user edits and control. We always want users to be able to make any desired changes so that the app remains pleasantly time-saving, and our AI tools are no different. Our emphasis on Reduct literals also enables us to have a very powerful interface for users to work directly with any outputs.
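To make the "literals" idea a little more concrete, here is a minimal sketch in Python. It is an illustration only, not Reduct’s actual implementation: the names `Literal`, `find_literal`, and `keep_grounded` are hypothetical, and we assume the LLM step has already proposed some candidate quotes as plain strings. The sketch treats the LLM as an intermediate step, keeps only the candidates that can be located word-for-word in the transcript, and returns the transcript’s own text and offsets rather than the generated string.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Words are compared case-insensitively; punctuation and spacing are ignored.
WORD = re.compile(r"[A-Za-z0-9']+")


@dataclass
class Literal:
    """A verbatim span of the transcript (hypothetical type for this sketch)."""
    start: int  # character offset where the span begins
    end: int    # character offset just past the span
    text: str   # the transcript's own text, never the LLM's wording


def find_literal(transcript: str, quote: str) -> Optional[Literal]:
    """Return the first transcript span whose words match the quote, or None."""
    tokens = [(m.group().lower(), m.start(), m.end())
              for m in WORD.finditer(transcript)]
    wanted = [w.lower() for w in WORD.findall(quote)]
    if not wanted:
        return None
    words = [t[0] for t in tokens]
    n = len(wanted)
    for i in range(len(words) - n + 1):
        if words[i:i + n] == wanted:
            start, end = tokens[i][1], tokens[i + n - 1][2]
            return Literal(start, end, transcript[start:end])
    return None


def keep_grounded(transcript: str, candidates: List[str]) -> List[Literal]:
    """Drop any candidate quote that cannot be found in the transcript."""
    found = (find_literal(transcript, c) for c in candidates)
    return [lit for lit in found if lit is not None]


transcript = ("We tried the export feature twice. "
              "It failed both times, which was frustrating.")
# Pretend these came back from an LLM; the second one is invented.
candidates = ["it failed both times", "The export feature is excellent"]

grounded = keep_grounded(transcript, candidates)
print([lit.text for lit in grounded])  # ['It failed both times']
```

Because each result carries character offsets into the transcript, an interface built on something like this could jump straight from a result to its surrounding context. A real system would likely need fuzzier matching and timestamps for video clips, which this sketch leaves out.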

Some of these are decisions that affect the design of our interface and how we present different tools to you; some are choices you’ll never witness directly when using the app as they occur within our back-end code as you interact with Reduct.

In all cases, you can be sure that we’ve been thoughtful and considerate about the decisions we’re making. We want to ensure our AI tools will never hide your data from you nor dictate your analysis, while simultaneously making your day-to-day work as smooth and stress-free as possible.

Stay tuned for more about how we’re implementing AI in the app.